Two years ago, things were different with AI-generated images. One quick glance at the hands and you knew what you were dealing with: six fingers here, fused knuckles there. AI images had that characteristic look, that "something's off here" feeling. The eyes sometimes looked dead, the faces too symmetrical, the lighting almost always too perfect. You could spot it if you knew what to look for.

That time is over.

The development over recent months has been disturbingly rapid. Midjourney, Stable Diffusion, DALL-E: they've all learned. They haven't just gotten a bit better; they've fundamentally changed how they work. What used to be telltale signs are now history.

Take frequency analysis. Real photos have a characteristic energy distribution in the frequency spectrum: most of the energy sits in the low-frequency range, reflecting smooth transitions and organic structures. Early AI generators produced flat curves with extreme peaks in the high-frequency range, a clear pattern. Modern AI models imitate these natural frequency distributions remarkably well.
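To make the idea concrete, here is a minimal sketch of one way to measure how much of an image's spectral energy sits in the high frequencies. It assumes a small grayscale image given as a list of rows of pixel values; the function name `high_frequency_energy_ratio` and the `cutoff` of 0.25 (relative to the Nyquist frequency) are illustrative assumptions, not taken from any particular detector. It uses a naive 2D DFT so that it runs with the standard library alone; a real tool would use an FFT library on full-size images.

```python
import cmath
import math


def high_frequency_energy_ratio(img, cutoff=0.25):
    """Share of spectral energy above `cutoff` (1.0 = Nyquist along one axis).

    `img` is a grayscale image as a list of equal-length rows. Illustrative
    sketch only: the naive DFT below is O(n^4) and meant for tiny images.
    """
    h, w = len(img), len(img[0])
    # Remove the mean so the DC component does not dominate the total energy.
    mean = sum(map(sum, img)) / (h * w)
    total = high = 0.0
    for u in range(h):
        for v in range(w):
            coeff = sum(
                (img[y][x] - mean) * cmath.exp(-2j * math.pi * (u * y / h + v * x / w))
                for y in range(h)
                for x in range(w)
            )
            energy = abs(coeff) ** 2
            # Indices past the midpoint are negative frequencies; fold them back.
            fu = min(u, h - u) / (h / 2)
            fv = min(v, w - v) / (w / 2)
            total += energy
            if math.hypot(fu, fv) > cutoff:
                high += energy
    return high / total if total else 0.0


# A smooth horizontal wave vs. a pixel-level checkerboard, both 16x16.
smooth = [[128 + 100 * math.cos(2 * math.pi * x / 16) for x in range(16)] for _ in range(16)]
checker = [[255 if (x + y) % 2 else 0 for x in range(16)] for y in range(16)]
print(round(high_frequency_energy_ratio(smooth), 3))
print(round(high_frequency_energy_ratio(checker), 3))
```

The smooth wave should come out near 0 and the checkerboard near 1. A photo-like image lands somewhere in between, and where exactly the "natural" range lies is precisely what modern generators have learned to imitate.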

Or take edges. Tree branches, hair, the texture of knit sweaters: these were AI's Achilles' heel. The transitions looked unnatural, either too uniform or too chaotic. Now AI has mastered complex edge patterns. Individual hairs with varying light reflections, natural irregularities: it's all there.

The symmetry trap doesn't work anymore either. AI generators love symmetry. Natural photos typically score below 15 percent on symmetry measurements (our faces aren't perfect mirror images, after all), while AI images often clocked in at over 50 percent. But the latest models deliberately build in asymmetries, small deviations that make an image more lifelike.
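The percentages above don't come with a definition of how symmetry is measured, so here is one hypothetical way to score it: the share of pixel pairs that still match when a row is mirrored left to right. The function name and the `tolerance` of 8 gray levels are assumptions for illustration, and the 15 and 50 percent figures from the text won't necessarily map onto this particular score.

```python
def mirror_symmetry_score(img, tolerance=8):
    """Fraction (0.0 to 1.0) of left/right mirrored pixel pairs that match.

    Compares each pixel in the left half of a row with its mirror image in the
    right half; a pair "matches" if the values differ by at most `tolerance`.
    """
    matches = pairs = 0
    for row in img:
        w = len(row)
        for x in range(w // 2):
            pairs += 1
            if abs(row[x] - row[w - 1 - x]) <= tolerance:
                matches += 1
    return matches / pairs if pairs else 0.0


symmetric = [[10, 40, 90, 90, 40, 10]] * 3
ramp = [list(range(0, 160, 10))] * 4  # every mirrored pair differs by at least 10
print(mirror_symmetry_score(symmetric))
print(mirror_symmetry_score(ramp))
```

A real check would first find the face or object's axis instead of assuming it runs down the middle of the frame; this sketch skips that step.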

Textures were a problem for a long time. Fur across larger areas? No way. Wood grain? Looked like plastic. Detection algorithms compared adjacent image blocks and searched for abrupt texture changes or overly homogeneous areas. These days, generators handle complex textures with ease.
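The block comparison described above can be sketched roughly like this, using the pixel variance of each tile as a crude texture measure. The tile size of 8 pixels and both thresholds (`flat`, `jump`) are assumptions chosen for illustration; a real detector would likely use richer texture statistics than plain variance.

```python
from statistics import pvariance


def block_variances(img, block=8):
    """Pixel variance of each non-overlapping block x block tile (rows of tiles)."""
    h, w = len(img), len(img[0])
    return [
        [
            pvariance([img[y][x] for y in range(by, by + block) for x in range(bx, bx + block)])
            for bx in range(0, w - block + 1, block)
        ]
        for by in range(0, h - block + 1, block)
    ]


def texture_anomalies(img, block=8, flat=1.0, jump=10.0):
    """Flag overly homogeneous tiles and abrupt texture changes between neighbours.

    A tile is "homogeneous" if its variance is below `flat`; two neighbouring
    tiles show an "abrupt_change" if one variance exceeds the other by more
    than a factor of `jump` (the lower one is floored at `flat`).
    """
    grid = block_variances(img, block)
    flags = []
    for i, row in enumerate(grid):
        for j, var in enumerate(row):
            if var < flat:
                flags.append(("homogeneous", (i, j)))
            # Compare with the right and lower neighbours so each pair is checked once.
            for ni, nj in ((i, j + 1), (i + 1, j)):
                if ni < len(grid) and nj < len(row):
                    low, high = sorted((var, grid[ni][nj]))
                    if high > jump * max(low, flat):
                        flags.append(("abrupt_change", (i, j), (ni, nj)))
    return flags


# Uniform fine texture everywhere vs. texture that suddenly turns flat.
noisy = [[100 if (x + y) % 2 else 0 for x in range(16)] for y in range(16)]
mixed = [[(100 if (x + y) % 2 else 0) if x < 8 else 50 for x in range(16)] for y in range(8)]
print(texture_anomalies(noisy))
print(texture_anomalies(mixed))
```

The first image produces no flags; the second flags the flat right-hand tile and the abrupt change between the two tiles.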

Then there were grid artifacts. Many models worked in blocks of 8×8 or 16×16 pixels, which left subtle repetitive patterns. Especially when an image was heavily scaled up or down, these patterns could expose it as AI-generated. That's gone too.
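A grid like that can be quantified with a simple blockiness measure, similar in spirit to the metrics used for JPEG block artifacts: compare the average brightness jump across the assumed block boundaries with the average jump everywhere else. The function name and the default period of 8 pixels are assumptions for this sketch. One caveat: JPEG compression itself works on 8×8 blocks, so a heavily compressed real photo can trigger this measure too.

```python
def blockiness_ratio(img, period=8):
    """Mean jump across `period`-pixel block boundaries vs. the mean jump elsewhere.

    Values well above 1.0 suggest a regular block grid; about 1.0 means none.
    Only jumps between neighbouring columns are checked here; jumps between
    rows can be checked the same way on the transposed image.
    """
    boundary, interior = [], []
    for row in img:
        for x in range(len(row) - 1):
            jump = abs(row[x + 1] - row[x])
            # A boundary lies between columns x and x + 1 when x + 1 is a multiple of period.
            (boundary if (x + 1) % period == 0 else interior).append(jump)
    if not boundary or not interior:
        raise ValueError("image must be wider than one block")
    mean_b = sum(boundary) / len(boundary)
    mean_i = sum(interior) / len(interior)
    if mean_i == 0:
        return 1.0 if mean_b == 0 else float("inf")
    return mean_b / mean_i


smooth = [list(range(32))] * 4                      # even ramp, no grid
blocky = [[x + 10 * (x // 8) for x in range(32)]] * 4  # extra step every 8 pixels
print(blockiness_ratio(smooth))
print(blockiness_ratio(blocky))
```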

The same goes for unnatural smoothness. AI images often had too-perfect transitions and unrealistically smooth surfaces, while real photos exhibit natural sensor noise. Noise analysis was a reliable tool. Modern generators, however, deliberately add realistic noise with the right local variance and the right grain.
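A minimal sketch of this kind of noise analysis: subtract from each pixel the mean of its four neighbours, which cancels out smooth gradients, and look at how much residual is left. The function name and the synthetic test images are assumptions for illustration. Computing the same value per tile instead of over the whole image would give the local variance that modern generators now imitate.

```python
import random
from statistics import pstdev


def noise_residual_std(img):
    """Standard deviation of a simple high-pass residual.

    Each interior pixel is compared with the mean of its four direct neighbours;
    smooth gradients cancel out, while sensor-like noise remains.
    """
    residuals = [
        img[y][x] - (img[y - 1][x] + img[y + 1][x] + img[y][x - 1] + img[y][x + 1]) / 4
        for y in range(1, len(img) - 1)
        for x in range(1, len(img[0]) - 1)
    ]
    return pstdev(residuals)


rng = random.Random(42)  # fixed seed so the example is repeatable
clean = [[2 * x + 3 * y for x in range(16)] for y in range(16)]
noisy = [[v + rng.gauss(0, 5) for v in row] for row in clean]
print(noise_residual_std(clean))
print(noise_residual_std(noisy))
```

The perfectly smooth gradient leaves a residual of exactly zero, while the version with added Gaussian noise leaves a clearly positive value.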

These patterns have disappeared in AI-generated images. Now what?

Manufacturers say they're embedding watermarks. For Stable Diffusion there's Stable Signature, which fine-tunes the model's image decoder so that an invisible watermark is baked into every generated image. Google DeepMind developed SynthID, watermarks that supposedly survive even compression. Sounds great. Except these watermarks can't be read without the specific decoder for each model. The technology exists, but it isn't publicly accessible, which makes it useless for anyone who wants to check an image's origin.

Then there's C2PA, the Coalition for Content Provenance and Authenticity. Adobe, Microsoft and others developed this standard, which is meant to store origin information and editing history in an image's metadata. Images can be explicitly marked as "trainedAlgorithmicMedia". Is C2PA the solution? Not yet. As of today, only Adobe Firefly and Microsoft Designer use the standard consistently, and the list of non-implementers is significantly longer than the list of supporters.
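Where a generator does write this metadata, even a crude byte search can hint at it. The sketch below simply looks for the IPTC term "trainedAlgorithmicMedia" and for the "c2pa" label of a manifest store in a file's raw bytes. It verifies no signatures, a match could in principle be coincidental, and a missing marker proves nothing, since metadata is easily stripped. The function names are hypothetical; for real verification, the C2PA project's own tooling (for example the open-source c2patool) is the right place to go.

```python
from pathlib import Path

# IPTC "digital source type" term for AI-generated media, as mentioned above.
AI_SOURCE_TYPE = b"trainedAlgorithmicMedia"
# Label of the JUMBF box that holds a C2PA manifest store.
C2PA_LABEL = b"c2pa"


def provenance_hints(data: bytes) -> dict:
    """Crude byte search for provenance markers; does NOT validate anything."""
    return {
        "c2pa_label_found": C2PA_LABEL in data,
        "ai_source_type_found": AI_SOURCE_TYPE in data,
    }


def scan_file(path: str) -> dict:
    """Read an image file and report which markers appear in its raw bytes."""
    return provenance_hints(Path(path).read_bytes())


# Synthetic byte strings standing in for file contents.
tagged = b"\xff\xd8...jumb...c2pa...digitalsourcetype/trainedAlgorithmicMedia..."
untagged = b"\xff\xd8 plain image bytes without provenance data"
print(provenance_hints(tagged))
print(provenance_hints(untagged))
```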

So what's left?

Professional, paid detection tools like Hive AI or Optic AI use neural networks trained on millions of images. Their success rate is reportedly 80 to 90 percent. That sounds like a lot, but in practice it means that one in every five to ten images is misidentified.

Please use your brain!

We're back at square one: human judgment. Critical questioning. Unusually perfect details, impossible perspectives, and above all, logical errors. These are the last clues.

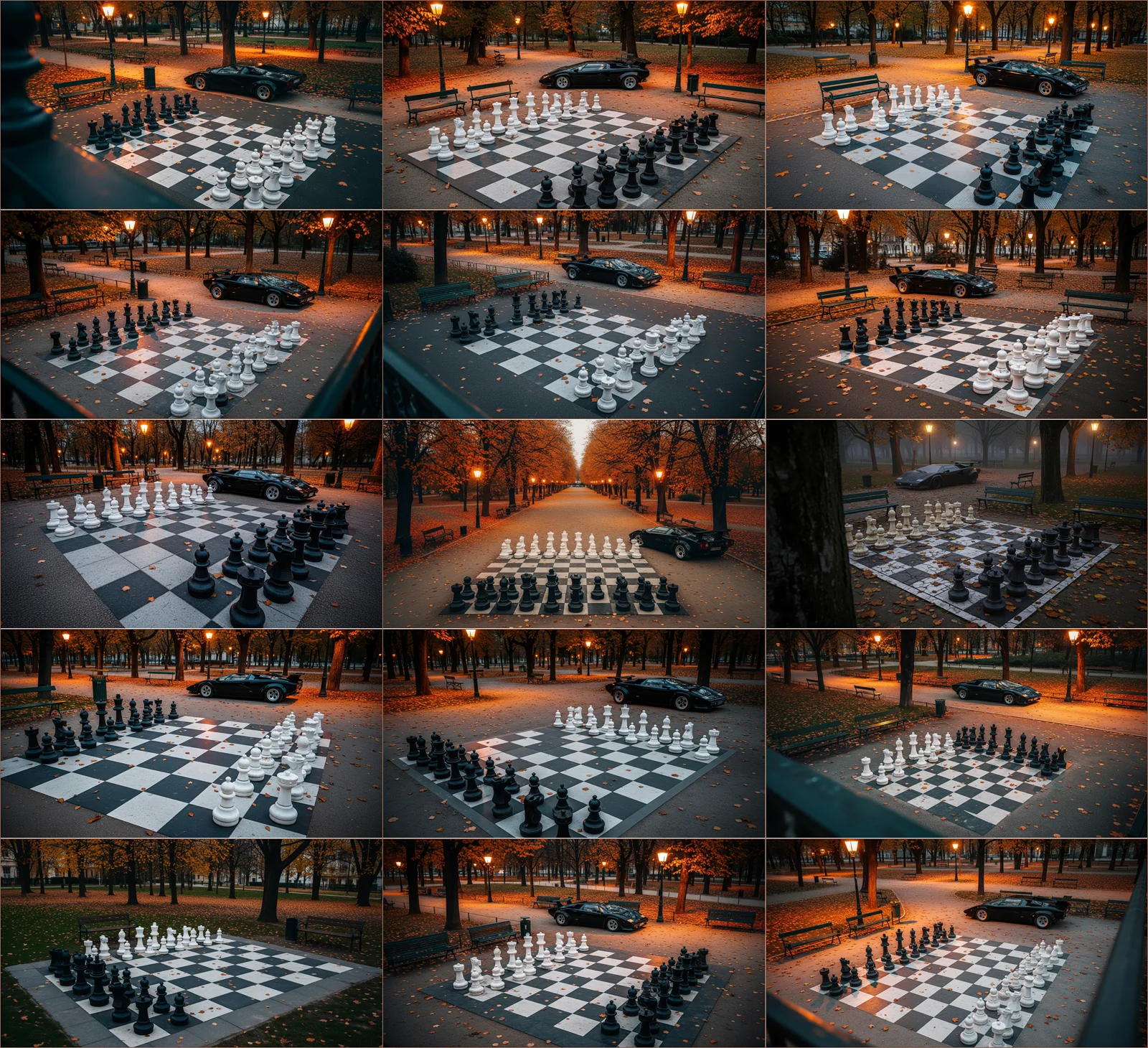

Did you notice the two kings standing in the center, pieces that don't belong in this configuration? The unusual threesome the AI produced here gives the image away.

My conclusion

For us photographers, this means our work no longer automatically carries the stamp of reality. We have to explain that our photos are real. Skepticism has become the new normal.

P.S. Think about how absurd generated cars looked two years ago. In the image above, which took only 18 seconds to create at 2K resolution, the Lambo looks as if I had actually driven it to the chessboard in the park. Oh wait, the park doesn't even exist in real life.

And when I move on to the next chessboard in the park, it also stubbornly shows 9 squares per row instead of 8, despite my explicit correction. Even the simple principle of alternating black and white squares proved too much for the AI. When it comes to obstinacy, it's definitely very human.

I'm really impressed that the old patterns are gone and that AI images now look quite realistic. But the logic errors remain, at least for now. Even after more than 15 attempts: